Why do we have inventories?

All consumer goods, from soap and food to household appliances, are held in inventories. We are used to thinking about store inventories, but there are also inventories in distribution centers and in factory warehouses for finished products.

This inventory has a unique feature: it was purchased, shipped, or manufactured before a consumer ordered it. Inventory is necessary only when the customer's tolerance for waiting is shorter than the time it takes to bring the product within the customer's reach. If customers are willing to wait as long as or longer than it takes for the product to arrive, no inventory is needed.

Therefore, all inventory must be generated prior to sale, so we require some method to help us anticipate the appropriate amount of inventory.

Everything that we will examine about inventory applies to each individual product, to each SKU (stock keeping unit).

But how much inventory is adequate?

Knowing that inventory costs money, the answer begins with the words "minimum possible." But knowing that inventory generates sales, our answer must also contain the objective: to satisfy the "maximum expected demand".

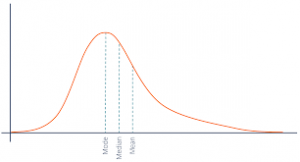

Demand fluctuates, and if our inventory matches only average demand, shortages will occur very often and we will lose sales. Shortages (also called "breaks" or stockouts) are precisely what we want to avoid with inventory.

Lastly, inventory is required to satisfy sales before another replenishment arrives.

So our "formula" for the adequate inventory, which we can also call optimal, is:

The minimum inventory required to meet the maximum expected demand before the next replenishment.

When does the next replenishment occur?

We can already see that the time between one replenishment and the next is a fundamental element in our formula. We can express the "maximum expected demand" as the daily average multiplied by the replenishment time and multiplied by a safety factor.
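The expression above can be written as a short calculation. This is only a sketch: the function name and the sample numbers (20 units per day, 7 days, a safety factor of 1.5) are illustrative assumptions, not values from a real case.

```python
def max_expected_demand(avg_daily_demand, replenishment_days, safety_factor):
    """Daily average x replenishment time x safety factor."""
    return avg_daily_demand * replenishment_days * safety_factor


# Hypothetical SKU: 20 units per day, 7 days between replenishments.
weekly_target = max_expected_demand(20, 7, 1.5)
print(weekly_target)  # 210.0

# Doubling the replenishment time doubles the inventory required.
biweekly_target = max_expected_demand(20, 14, 1.5)
print(biweekly_target)  # 420.0
```

The second call shows the proportionality directly: with the same demand and the same safety factor, twice the time means twice the inventory.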

If time grows, inventory grows. And vice versa. We will see that this fact is an important part of a new way of managing inventories, but first let's understand how most supply chains work.

A replenishment order can be a production order, a purchase order, or a dispatch order. In all cases, an "order" is a decision, which is very good news, because we can decide differently if we want to.

Before we go on with our deductions, give some thought to the fact that inventory is a result of this decision. If your company is unhappy because it has excess inventory and shortages at the same time, do not forget that this is the result of replenishment decisions taken days ago.

When does the next replenishment take place? The answer is now obvious: when we decide.

How do you decide today when to replenish?

When searching the internet, articles such as https://blog.nubox.com/empresas/reposicion-de-inventario, https://biddown.com/no-sabes-cuando-pedir-mas-stock-calcula-reorder-point-rop/ and https://www.mheducation.es/bcv/guide/capitulo/8448199316.pdf appear, along with several others, and they have some things in common:

- All emphasize the importance of good inventory management for good profitability.

- All of them mention some kind of reorder point; some are explicit about the MIN/MAX method and also about the economic replenishment batch.

As an anecdote, NIKE publishes this page https://www.nike.com/cl/help/a/disponibilidad-del-producto-gs to say that the product you are looking for and didn't find will be available when it is in stock... it didn't help me much, to be honest.

And looking at books and syllabi, we see that MIN/MAX and EOQ (economic order quantity) are recurring as methods for deciding when and how much to replenish each SKU. Let's take a look at these concepts.

The MIN/MAX method and EOQ

In agreement with everything said above in this article, the objective of the method is to have availability at the lowest possible cost.

The method consists of determining a minimum number of units in inventory that must satisfy sales while our next order arrives. This is why this minimum quantity is sometimes called ROP (reorder point).

The quantity ordered then brings the inventory back up to a maximum number of units. In general this quantity is calculated with a formula that optimizes costs and results in an economic replenishment batch.

Let's look at each of these things in more detail and what effects using the method has.

This figure represents in theory what the method says, but note that there are two unrealistic elements in the graph: 1) in each replenishment it appears as if the order arrived the same day it was ordered; 2) there is perfect regularity in the demand.

The reality is much closer to this other graph:

Between the time the order is placed and the time the replenishment arrives there is a supply time; it is not instantaneous. And consumption, or demand, is variable. The former explains why inventory can run out. And again, if replenishment were instantaneous, we would not need inventory.

But I want to dwell for a moment on the variability of demand. As you can see in the graph, when replenishment is done by setting the ROP or MIN at a fixed quantity, and demand is variable, what you see here occurs: the time between one replenishment order and another is variable.
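A small simulation makes this effect visible. It is a minimal sketch under stated assumptions: the function name, the lost-sales rule (unmet demand is simply not sold), and the sample numbers are all hypothetical, and an order is placed whenever on-hand plus on-order stock is at or below the MIN.

```python
def simulate_min_max(daily_demand, min_level, max_level, lead_time_days):
    """Return the days on which a MIN/MAX policy places an order."""
    on_hand = max_level
    pending = {}      # arrival day -> quantity on its way
    order_days = []
    for day, demand in enumerate(daily_demand):
        on_hand += pending.pop(day, 0)       # receive the order due today
        on_hand -= min(demand, on_hand)      # sell what is available
        on_order = sum(pending.values())
        if on_hand + on_order <= min_level:  # reorder point reached
            pending[day + lead_time_days] = max_level - on_hand - on_order
            order_days.append(day)
    return order_days


# Hypothetical week of variable demand, MIN = 20, MAX = 40, 1 day of supply time.
order_days = simulate_min_max([10, 10, 5, 5, 5, 5, 20], 20, 40, 1)
gaps = [later - earlier for earlier, later in zip(order_days, order_days[1:])]
print(order_days)  # [1, 5, 6]
print(gaps)        # [4, 1]
```

With a fixed MIN, the gap between orders is four days when demand is slow and one day when it spikes: the replenishment time is an outcome of demand, not a decision.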

Let's revisit what we already know: the inventory needed to generate sales depends on the replenishment time. Therefore, if the replenishment time changes over time, but the inventory does not, then the inventory held is almost always wrong, with a bias towards excess.

That is, the MIN/MAX method, so popular in academic programs, is a method that leads to always having wrong inventories (except when demand has little variability).

One of the elements of a solution to the chronic inventory problem, i.e. the problem of having excess and shortages simultaneously, is to set the frequency of replenishment.

If we set the frequency, the MIN is no longer relevant. The MAX will be the quantity we have to maintain, but if there were few sales since the last order, the quantity to order will be much less than the EOQ (economic order quantity).

The EOQ quantity is calculated with a formula involving cost of shortage and cost of storage plus cost of generating an order. The concept is that if the quantity is large, the cost of storage is higher, but the cost of generating orders is lower (fewer orders per year).
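The trade-off described above can be sketched with the classic textbook form of the EOQ. Note the assumption: this classic form uses only the order cost and the storage (holding) cost and omits the shortage cost mentioned in the text; the parameter names and the sample numbers are hypothetical.

```python
import math


def eoq(annual_demand, order_cost, holding_cost):
    """Classic economic order quantity: sqrt(2 * D * S / H)."""
    return math.sqrt(2 * annual_demand * order_cost / holding_cost)


def annual_cost(quantity, annual_demand, order_cost, holding_cost):
    """Yearly cost of ordering plus yearly cost of storing half a batch on average."""
    ordering = annual_demand / quantity * order_cost
    holding = quantity / 2 * holding_cost
    return ordering + holding


# Hypothetical SKU: 1200 units per year, 50 per order, 3 per unit per year to store.
best = eoq(1200, 50, 3)
print(best)                             # 200.0
print(annual_cost(100, 1200, 50, 3))    # 750.0, many small orders
print(annual_cost(best, 1200, 50, 3))   # 600.0, the minimum
print(annual_cost(400, 1200, 50, 3))    # 750.0, few large orders
```

Because the order cost sits in the numerator of the formula, the computed batch shrinks as that cost is estimated lower.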

First, the shortage cost is very difficult to estimate and is likely to be much higher than estimated. Two aspects are usually underestimated. The first is that shortages damage reputation, which reduces future demand. The second is that a shortage affects sales differently depending on how long it lasts.

In general, it can be said that the Pareto principle, the 80/20 principle, also applies to sales. This principle says that 20% of the factors are responsible for 80% of the result. The numbers 80 and 20 are references to indicate asymmetry.

In one case I knew well, a 5% shortage was generating 30% lost sales. I know this because when that 5% was eliminated, sales increased 40%. (Note that out of a potential total of 100, 70 were being sold; increasing 70 by 40%, 70 x 1.40, gives 98.)

Therefore, the EOQ formula greatly underestimates the shortage cost.

But in addition, the costs of warehousing and order generation are usually sunk costs, or fixed costs, however you want to look at them. The first is the cost of warehouse space. And this becomes variable only if we grow inventory above a certain level. And the cost of generating orders is made up of people's salaries, which do not change if you place more or fewer orders. For practical purposes, the marginal costs of these two components are close to zero.

When applying the formula now, the resulting EOQ amount is very small, so it is irrelevant.

What is said for the cost of generating orders is valid for transportation and for production, where setups rarely have a real cost.

Therefore, the MIN/MAX method and the EOQ batch are fallacies, which lead to poor inventory replenishment decisions.

The TOC alternative

TOC stands for Theory of Constraints, created by Dr. Goldratt, and its principles also apply to inventories.

Taking the definition of the beginning, our objective will be to have the minimum inventory to satisfy the maximum expected demand before the next replenishment.

I will first explain the generic solution and then distinguish some cases.

As I mentioned, the first thing is to SET THE FREQUENCY. This is a decision, not a result. So this decision reduces the variability of the replenishment time drastically.

The second is to ignore the optimal batches and raise the frequency to the maximum reasonable (we will see what reasonable means when distinguishing cases), so that the time between orders is reduced to the minimum possible. As the inventory is proportional to the replenishment time, the resulting inventory is smaller, occupying less space and tying up less money.

Now, with less money invested, we have inventory for more than 98% of the demand cases, raising our fill rate to almost 100%.

The method consists of replenishing with the set frequency only what is missing from our target inventory, which in TOC jargon is called Buffer.
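The replenishment rule just described can be sketched in a few lines. One detail here is an assumption rather than something the text states: units already ordered but not yet received (`on_order`) are subtracted as well, so that the same shortfall is not ordered twice. The function name is hypothetical.

```python
def replenishment_quantity(buffer, on_hand, on_order=0):
    """Order only what is missing from the buffer; never a negative amount."""
    return max(0, buffer - on_hand - on_order)


# Buffer of 100 units, 85 on the shelf: order the 15 that were consumed.
print(replenishment_quantity(100, 85))        # 15
# 60 on the shelf and 30 already on the way: only 10 are still missing.
print(replenishment_quantity(100, 60, 30))    # 10
# Above the buffer (for example after it was reduced): order nothing.
print(replenishment_quantity(100, 120))       # 0
```

Note that no batch size appears anywhere: if few units were sold since the last order, the order is small.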

How do we know that the buffer is the right one?

The first buffer for each SKU must be estimated. There are varied ways to do this and they are found in the TOC literature. But it is not relevant to make a very accurate calculation for this initial state, so I recommend a simple formula. I personally prefer a moving sum of the last X days for about 3 to 6 months, where X is the number of days corresponding to the replenishment time. The replenishment time should include everything: the days between one order and another, and also all the supply time (production and transport). The buffer is the maximum of these sums.
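The moving-sum estimate above can be sketched as follows. The function name and the short sample history are hypothetical; in practice `daily_sales` would hold 3 to 6 months of daily figures for one SKU.

```python
def initial_buffer(daily_sales, replenishment_days):
    """Largest sum of sales over any window of `replenishment_days` consecutive days."""
    if len(daily_sales) < replenishment_days:
        raise ValueError("history is shorter than the replenishment time")
    window = sum(daily_sales[:replenishment_days])
    largest = window
    for day in range(replenishment_days, len(daily_sales)):
        # Slide the window one day: add the new day, drop the oldest one.
        window += daily_sales[day] - daily_sales[day - replenishment_days]
        largest = max(largest, window)
    return largest


# Hypothetical 7-day history with a replenishment time of X = 3 days.
# Moving sums: 10, 15, 11, 18, 14, so the buffer is 18.
print(initial_buffer([3, 5, 2, 8, 1, 9, 4], 3))  # 18
```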

But the demand for a SKU can change, so the buffer must also change. Dynamic Buffer Management is the TOC technique to automate this procedure whereby the individual buffer of each SKU follows the actual demand. It is color-based, has certain rules, and consists of increasing the buffer by one-third when it detects that inventory is being consumed faster than it is being replenished. And it is reduced by one third when it is detected that consumption has slowed down.
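A simplified sketch of such a rule is shown below. The one-third adjustments come from the text; everything else is an assumption made for illustration: the red zone is taken as the bottom third of the buffer, the green zone as the top third, and the trigger is that on-hand inventory stayed in the same zone on every day of the review window. Real implementations use more detailed rules.

```python
def adjust_buffer(buffer, on_hand_history):
    """Return the new buffer after reviewing recent on-hand levels.

    Assumed zones: red = below 1/3 of the buffer, green = above 2/3.
    """
    if not on_hand_history:
        return buffer
    red_limit = buffer / 3
    green_limit = 2 * buffer / 3
    if all(level < red_limit for level in on_hand_history):
        return round(buffer * 4 / 3)   # consumed faster than replenished
    if all(level > green_limit for level in on_hand_history):
        return round(buffer * 2 / 3)   # consumption has slowed down
    return buffer


print(adjust_buffer(90, [20, 10, 25]))   # 120, always in red: grow by one third
print(adjust_buffer(90, [70, 80, 65]))   # 60, always in green: shrink by one third
print(adjust_buffer(90, [50, 20, 70]))   # 90, mixed: leave the buffer alone
```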

Distinguishable generic cases

There are three cases that are worth distinguishing in general:

- Stocking points or points of sale of the same chain

- Central warehouse or locally sourced distribution center

- Central warehouse or distribution center supplied with imports

The first case corresponds to nodes that belong to us, so we have total control over their operation. In general, these points can be replenished daily, which leads to a significant reduction in inventories, and at the same time it is rare to maintain a shortage for more than one day. The criterion is to reduce the time to a minimum; if not one day, then two or at most three.

If, for example, we have several points of sale in a city far from the distribution center, where a truckload is sold every three days, it is possible to make a trip every three days to that city, delivering to each point of sale. When these grow in number, it may be better to have a regional warehouse serving that and other nearby cities, following the same principle.

When the nodes belong to us, it makes no sense that replenishment cannot be done with high frequency. In fact, trucks probably already go there very frequently today, just not to replenish the SKUs that were sold yesterday.

The second case is a warehouse that sources from its own production plant or from local suppliers. In both cases (for different reasons), placing daily orders is an exercise in futility.

In the case of production, it will be normal for the schedule not to accept orders to produce the same SKU several days in a row, because that would lead to wasted capacity in the constraint (see article https://blog.goldfish.cl/produccion/refutacion-al-balanceo-de-lineas/).

And if local suppliers receive purchase orders for the same SKU every day, they will most likely consolidate all those orders to be shipped once a week.

For these reasons, my recommendation for this second case is to set the frequency to one weekly order per SKU. This leads to dividing the SKUs into five groups (this is an example), so we will have Monday's SKUs, Tuesday's SKUs, and so on. The replenisher should only complete the buffers of the day's group.
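One simple way to form those groups is a round-robin split, sketched below. The split criterion is an assumption: the text only says the SKUs are divided into five groups, and in practice they might instead be grouped by supplier or production line. The SKU codes are hypothetical.

```python
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]


def assign_order_days(skus):
    """Spread SKUs evenly over the weekdays so each SKU is ordered once a week."""
    groups = {day: [] for day in WEEKDAYS}
    for index, sku in enumerate(sorted(skus)):
        groups[WEEKDAYS[index % len(WEEKDAYS)]].append(sku)
    return groups


groups = assign_order_days(["A", "B", "C", "D", "E", "F", "G"])
print(groups["Monday"])   # ['A', 'F']
print(groups["Tuesday"])  # ['B', 'G']
```

Each day the replenisher reviews only that day's list and tops up those buffers.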

The third case is the one that has given me the most food for thought. I haven't explicitly said so far, but you may have noticed that this method disregards forecasts: replenish only what was consumed and dynamically adjust the buffers.

The forecast contains errors, sometimes underestimating demand and sometimes overestimating it. The smaller the population served by a node, the greater the relative error. That is, a store serving 5,000 people requires more inventory per capita than a warehouse serving 50,000 people. This method of frequent replenishment reduces inventories at the nodes with the highest error.

This phenomenon of reducing the relative error as the population grows is called statistical aggregation, and is very well studied mathematically. Statistical aggregation also occurs as time lengthens. The problem with this, as we know, is that the inventory grows proportionally.
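The size of this effect can be illustrated with a short calculation. It rests on a strong simplifying assumption that the text does not make: every person's demand is independent and has the same mean and the same variability. Under that assumption the relative error (standard deviation divided by mean) of the total demand falls with the square root of the population. The function name and the starting value of 1.0 are hypothetical.

```python
import math


def relative_error(individual_relative_error, population):
    """Relative error of total demand for `population` independent, identical consumers."""
    return individual_relative_error / math.sqrt(population)


store = relative_error(1.0, 5_000)        # store serving 5,000 people
warehouse = relative_error(1.0, 50_000)   # warehouse serving 50,000 people
print(store / warehouse)  # about 3.16, the square root of 10
```

Under these assumptions, the store's relative error is roughly three times the warehouse's, which is why it needs more safety inventory per capita.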

The third case, where the distribution center is supplied by imports, is one where the replenishment time is naturally long. First, the transit time cannot be shortened without raising the cost (going from sea to air, for example). In addition, it may take a week or two of sales to accumulate enough volume to fill a container. These two factors mean that replenishment time cannot be reduced for anyone, i.e., competitors face the same conditions.

As we can see, with statistical aggregation over a long time and also over the largest population, the demand forecast error for this particular case is much lower in relative terms.

Even so, setting the frequency per SKU will have the same benefits as described above. In this case, however, the buffer adjustment method can be modified to incorporate forecasting techniques, making the method even more robust.

Conclusions

Both industry "best practices" and academic program content are backward in many places, and the proof is there for all to see. Just go to a supermarket or store with a shopping list of 10 items: how often do you find the entire list? And even then, the store is full of inventory. Do another test: look at the production date of something non-perishable that is produced in the country, and you will find that several weeks passed between the day it was produced and the day you took it in your hands. That speaks of excess inventory.

There you have the result.

On the other hand, supply chains that have adopted TOC to transform themselves have reduced inventories and raised their service levels to close to 100%.

You can always improve a lot more; but for that you need to acquire more knowledge. I hope that has happened to you by reading this article.